Zeliang Zhang

|

I am a PhD student in the Department of Computer Science at the University of Rochester, advised by Prof. Chenliang Xu. I received my B.Eng. from the Department of Computer Science at Huazhong University of Science and Technology (HUST) in 2022. As an undergraduate, I worked with Prof. Kun He and Mr. Xiaosen Wang at HUST on adversarial machine learning. I also work closely with Prof. Yijie Peng at PKU on gradient estimation (zeroth-order optimization), Prof. Xiao-Yang Liu at RPI/Columbia on high-performance quantum and tensor computation, and Dr. Xiaodong Liu at Microsoft Research on efficient LLMs. I play the Erhu well and am familiar with the violin. Feel free to reach out to chat :)

|

| [5/2025] | I will work as a research intern at the deep learning group of Microsoft Research, Redmond. |

| [4/2025] | Join us at our workshop at ICCV 2025 in Hawaii on Oct. 20! This is the workshop site. |

| [5/2024] | I will work as a research intern at the deep learning group of Microsoft Research, Redmond. |

| [1/2024] | I will work as an Erhu performer at the Traditional Chinese Ensemble Group of Rochester. |

| [10/2021] | I will work as a research intern at the machine learning for sustainability group of Microsoft Research Asia, Beijing. |

|

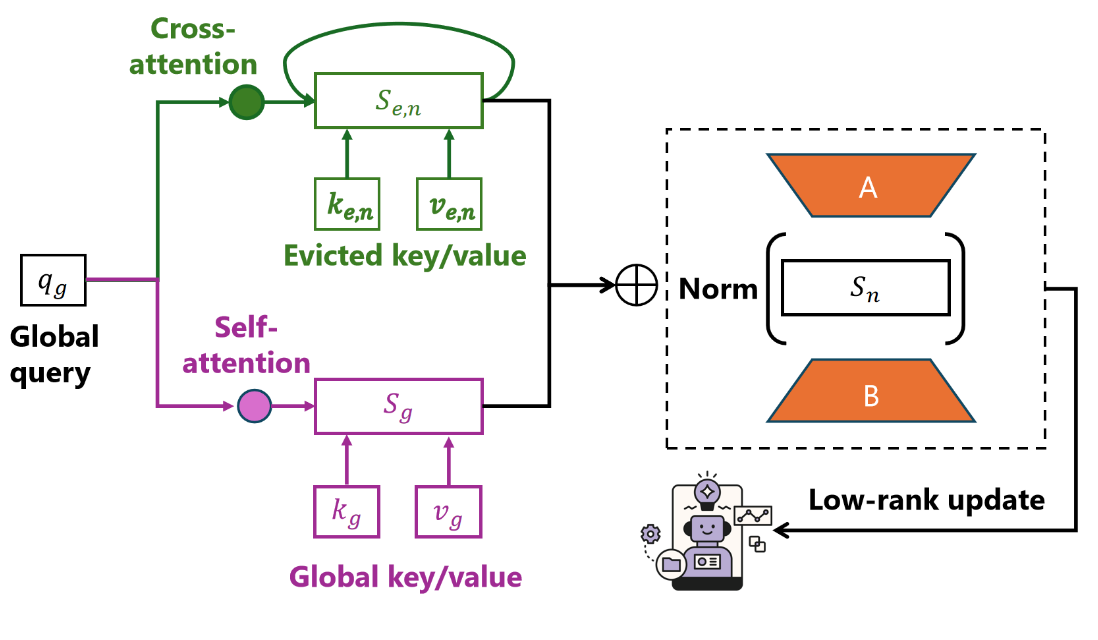

Zeliang Zhang, Xiaodong Liu, Hao Cheng, Hao Sun, Chenliang Xu, Jianfeng Gao. ICLR, 2026. We propose a parameter-efficient method for post-training LLMs to improve their long-context reasoning ability under limited memory. |

|

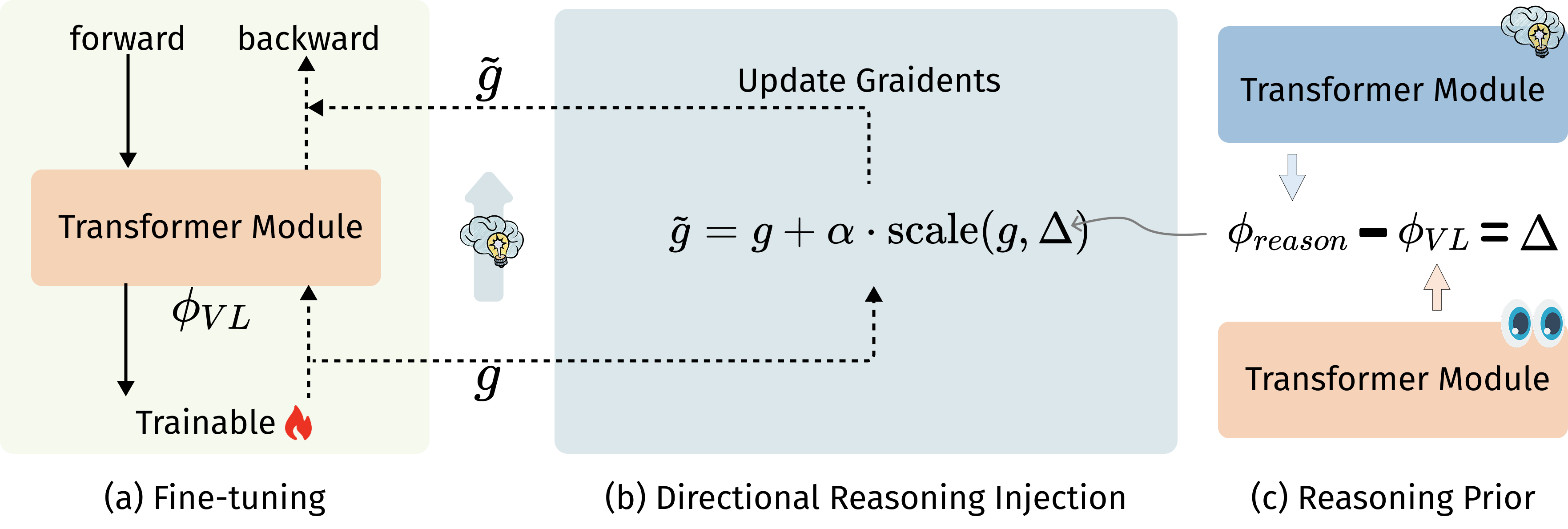

Chao Huang, Zeliang Zhang, Jiang Liu, Ximeng Sun, Jialian Wu, Xiaodong Yu, Ze Wang, Chenliang Xu, Emad Barsoum, Zicheng Liu. arXiv, 2025. DRIFT transfers reasoning from DeepSeek-R1 into QwenVL via gradient-space guidance, improving multimodal reasoning without destabilizing alignment or requiring expensive RL. |

|

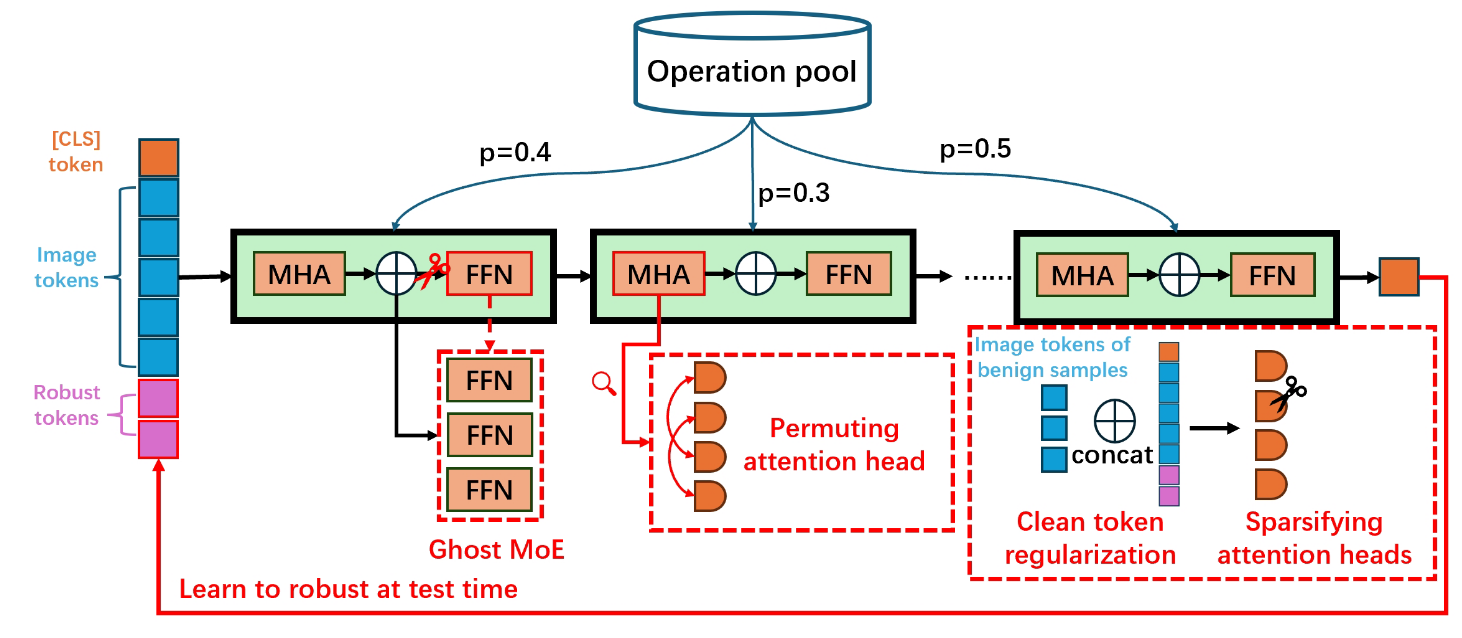

Jiani Liu*, Zhiyuan Wang*, ‡Zeliang Zhang*, Chao Huang, Susan Liang, Yunlong Tang, Chenliang Xu. NeurIPS, 2025. We propose a bag of tricks to boost the adversarial transferability of ViT-based attacks. |

|

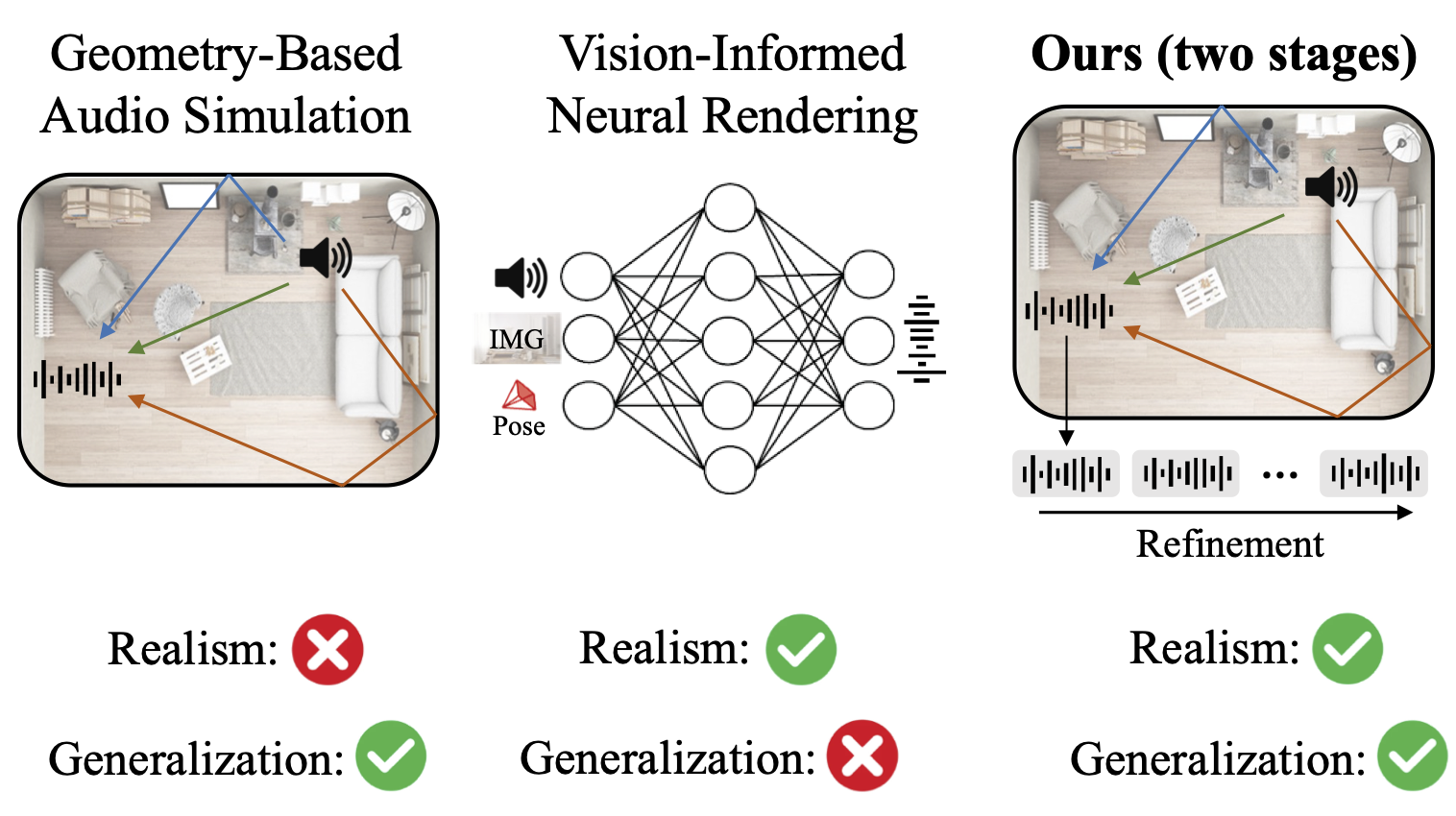

Susan Liang, Chao Huang, Yunlong Tang, Zeliang Zhang, Chenliang Xu. ICCV, 2025. We propose a novel method to boost audio-visual NeRF. |

|

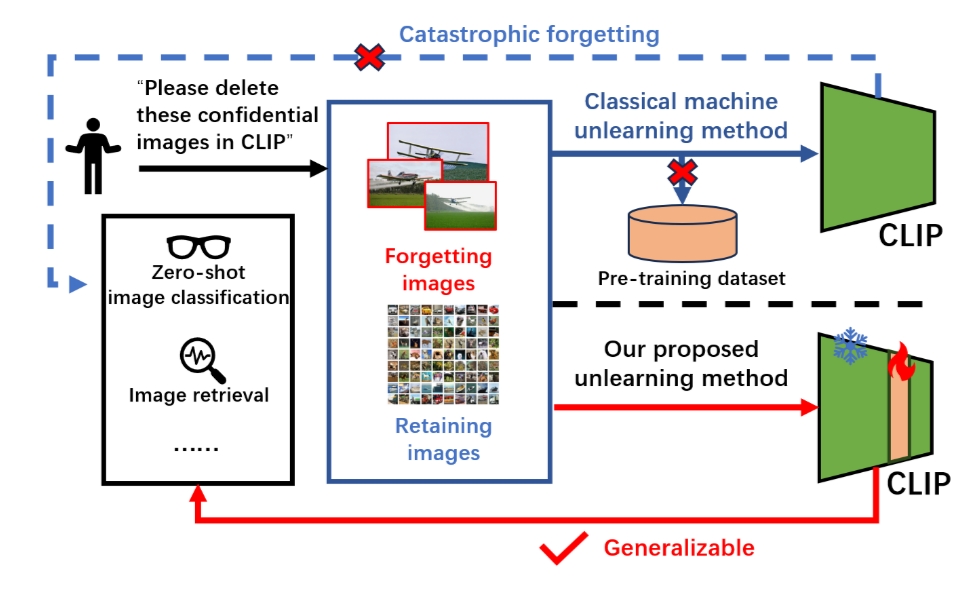

Zeliang Zhang, Gaowen Liu, Charles Fleming, Ramana Rao Kompella, Chenliang Xu. CVPR, 2025. We propose a novel method for making CLIP unlearn a subgroup of images. |

|

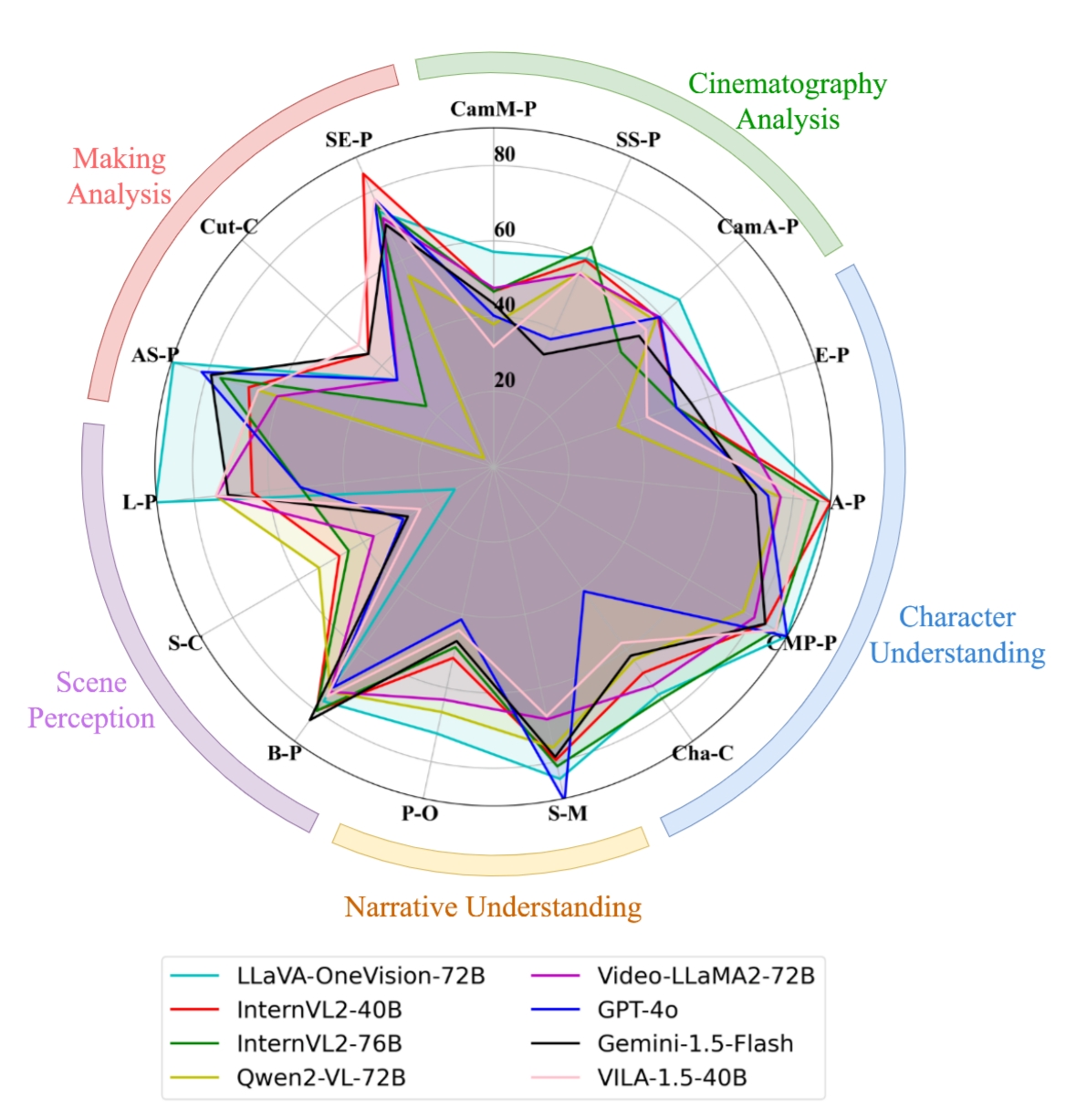

Yunlong Tang, Junjia Guo, Hang Hua, Susan Liang, Mingqian Feng, Xinyang Li, Rui Mao, Chao Huang, Jing Bi, Zeliang Zhang, Pooyan Fazli, Chenliang Xu. CVPR, 2025. We propose a new benchmark specifically designed to evaluate the video composition understanding capabilities of MLLMs. |

|

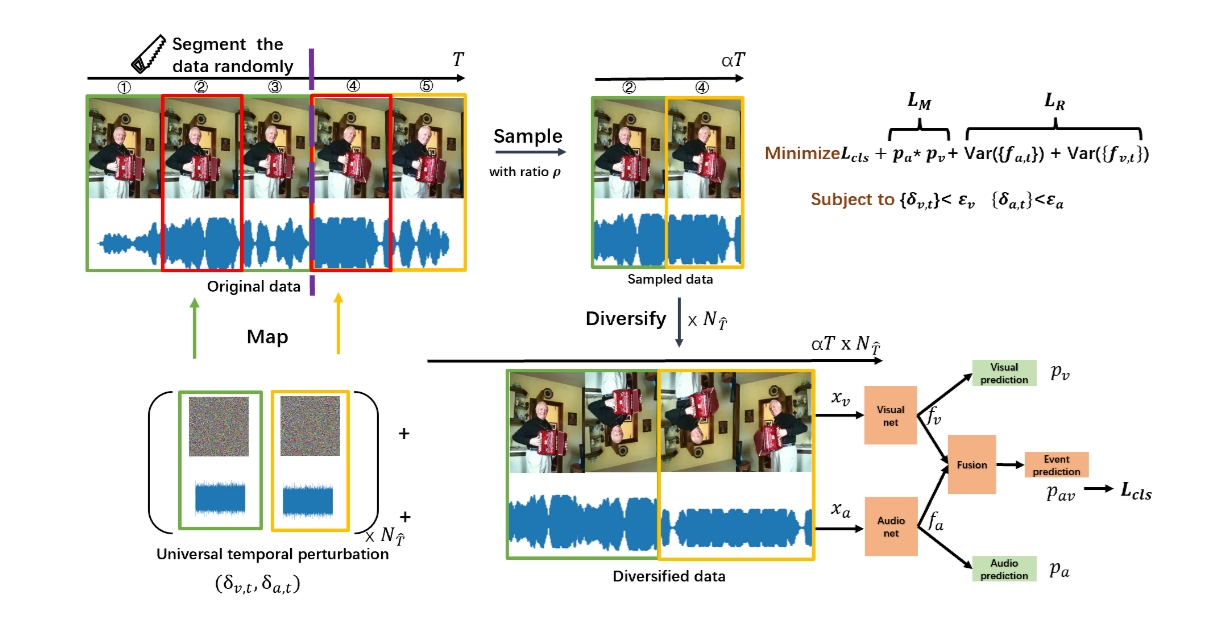

‡Zeliang Zhang*, Susan Liang*, Daiki Shimada, Chenliang Xu. ICLR, 2025. We propose a powerful audio-visual adversarial attack and an adversarial training defense method. |

|

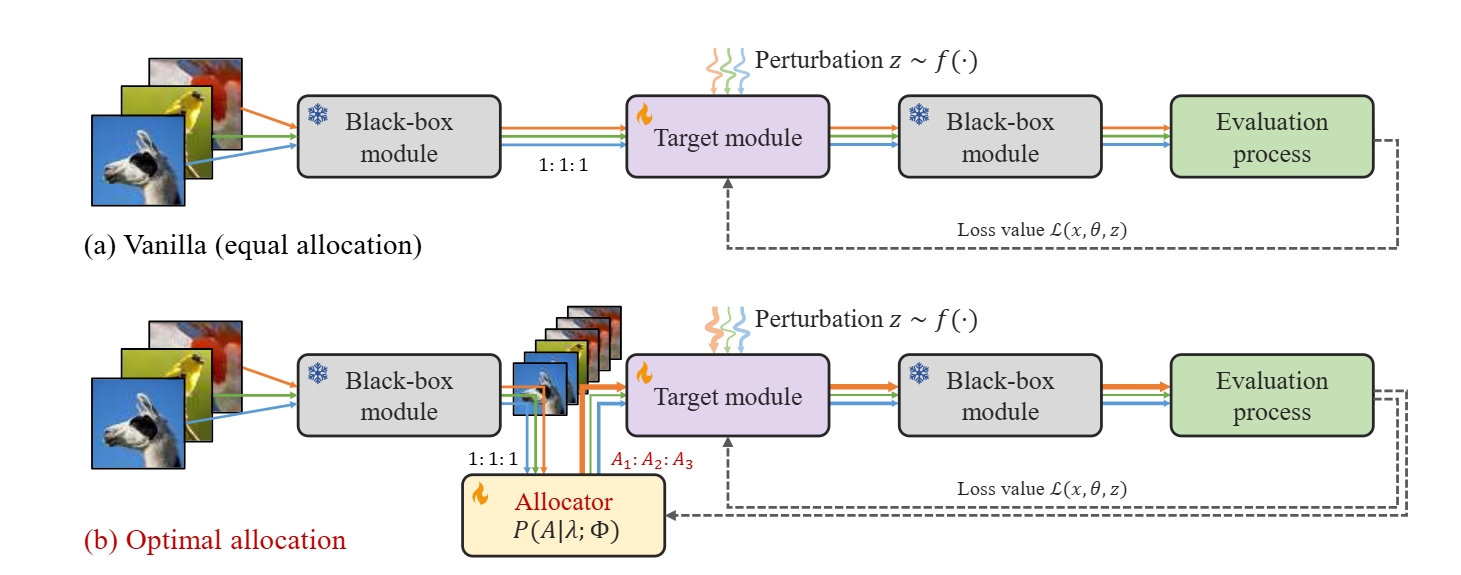

Tao Ren, Zishi Zhang, Jinyang Jiang, Guanghao Li, Zeliang Zhang, Mingqian Feng, Yijie Peng. ICLR, 2025. We propose allocating the optimal number of queries during forward-only training to balance estimation accuracy and computational efficiency. |

|

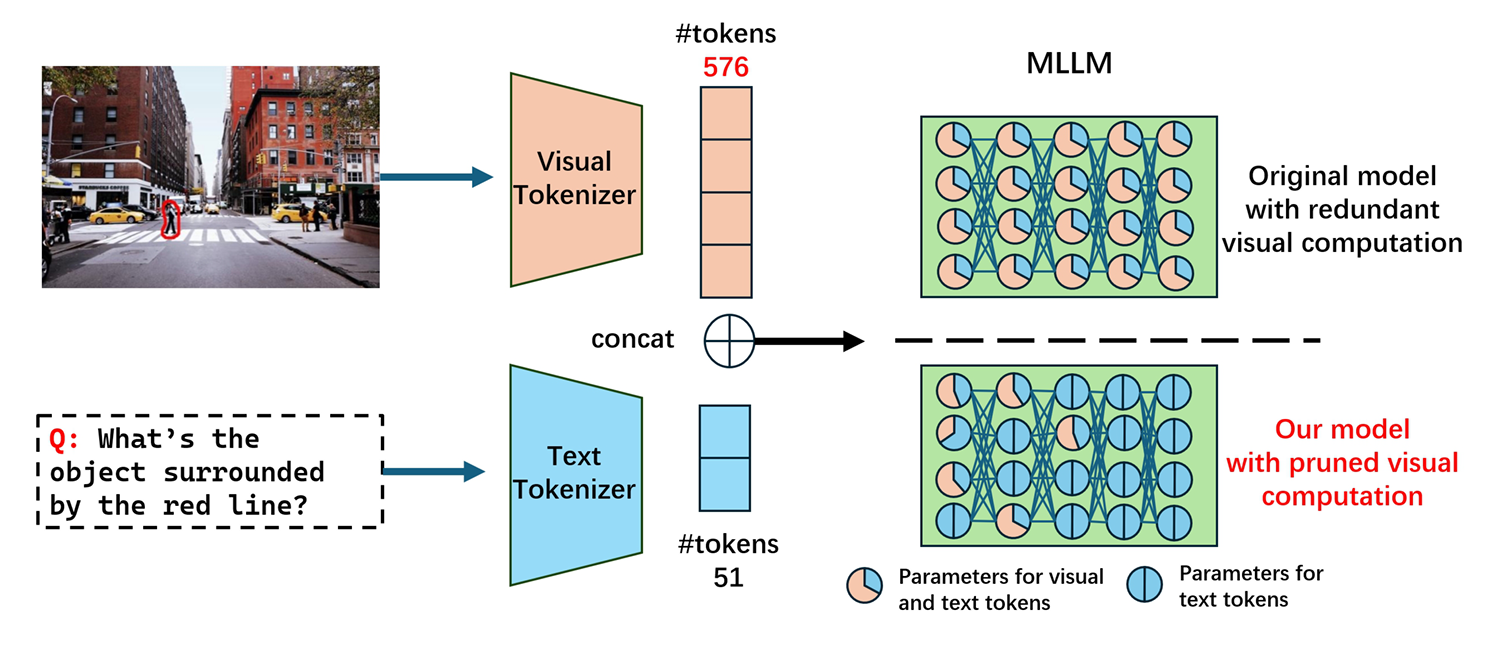

Zeliang Zhang*, Phu Pham*, ‡Wentian Zhao*, ‡Kun Wan*, Yu-Jhe Li, Daniel Miranda, Ajinkya Kale, Chenliang Xu. Preprint, 2024. We prune vision-related computation in multiple MLLMs to accelerate inference. |

|

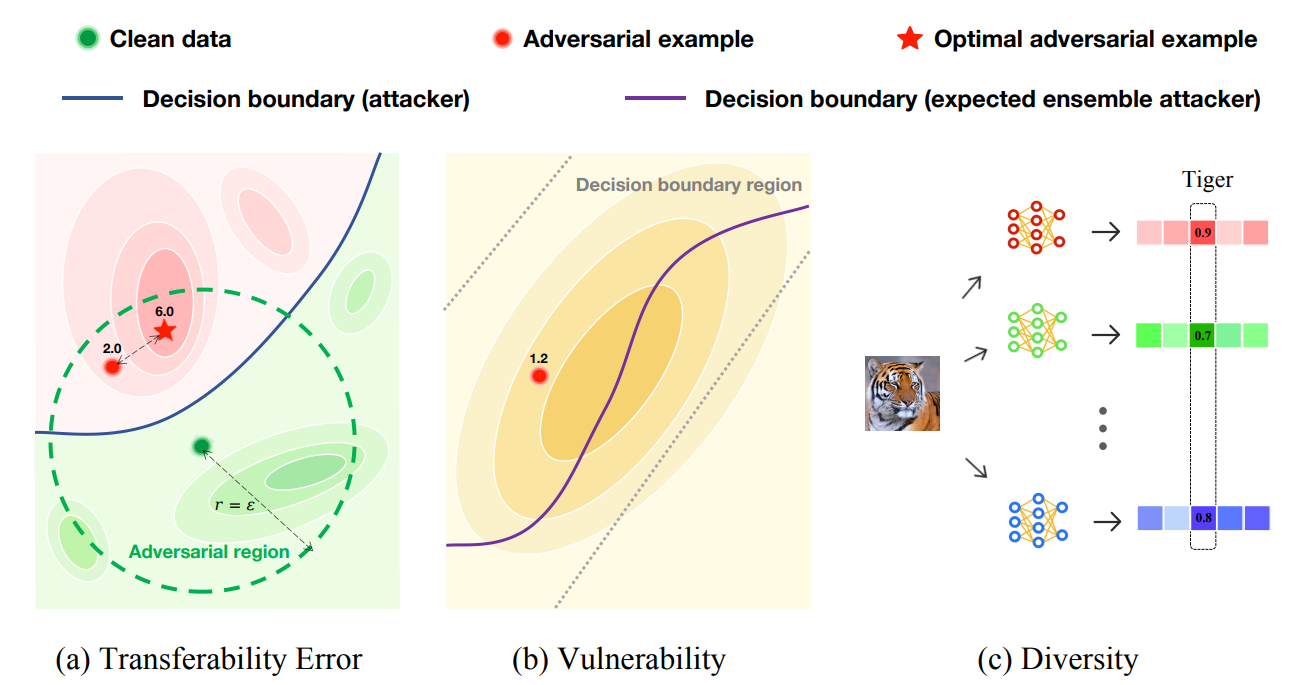

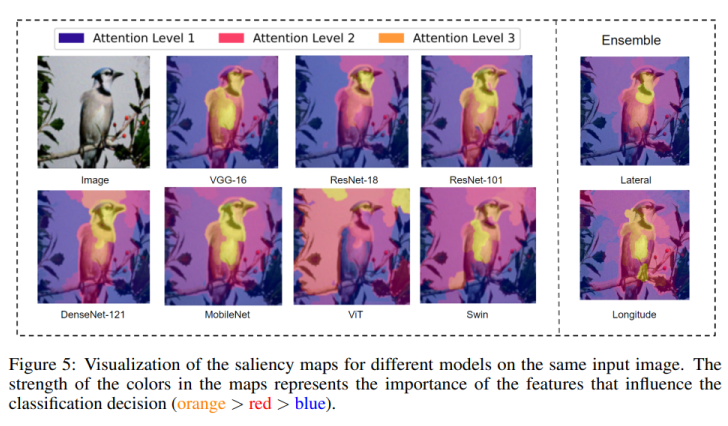

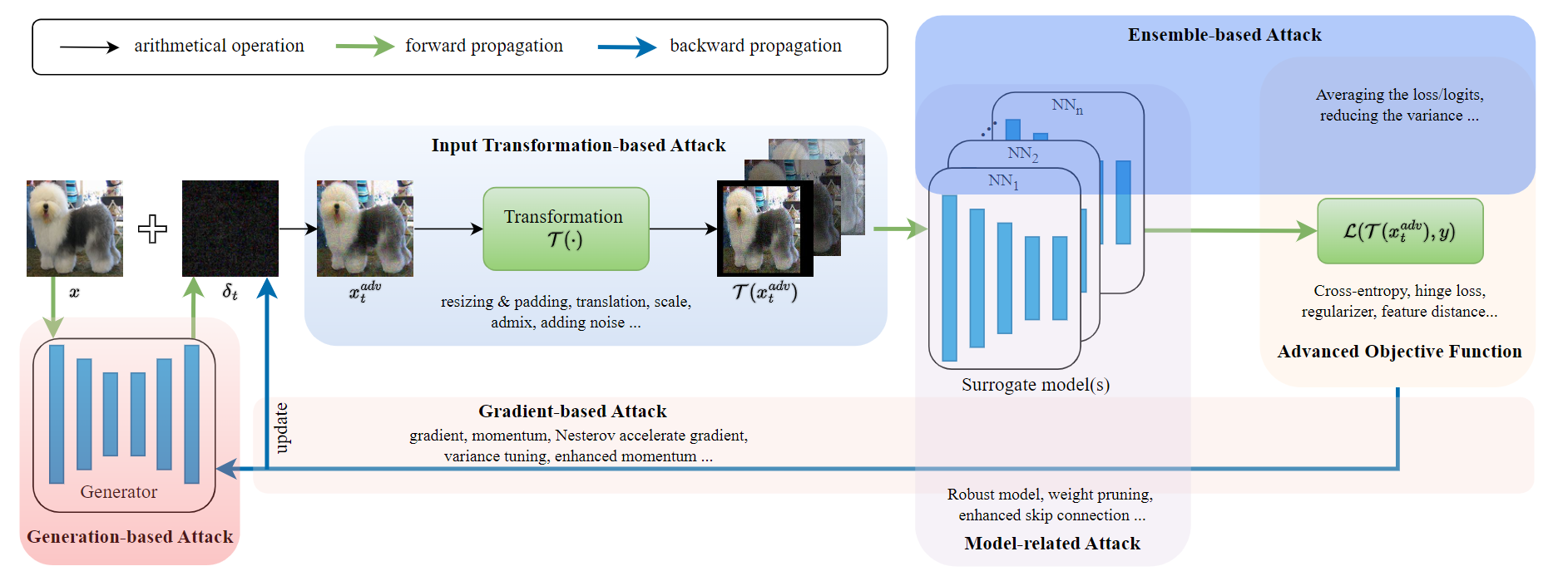

Wei Yao*, Zeliang Zhang*, Huayi Tang, Yong Liu. ICML, 2025. We provide early theoretical insights that serve as a roadmap for advancing model-ensemble adversarial attacks. |

|

Zeliang Zhang, Zhuo Liu, Mingqian Feng, Chenliang Xu. Findings of EMNLP, 2024. We empirically investigate the quantity bias in CLIP. |

|

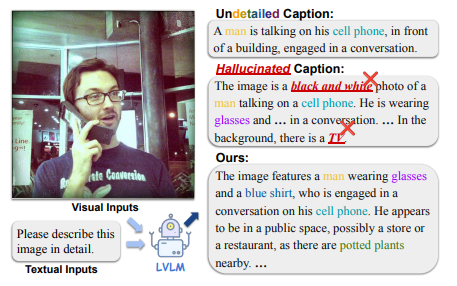

Mingqian Feng, Yunlong Tang, Zeliang Zhang, Chenliang Xu. Preprint, 2024. To alleviate hallucinations, we propose Differentiated Beam Decoding (DBD), along with a reliable new set of evaluation metrics: CLIP-Precision, CLIP-Recall, and CLIP-F1. |

|

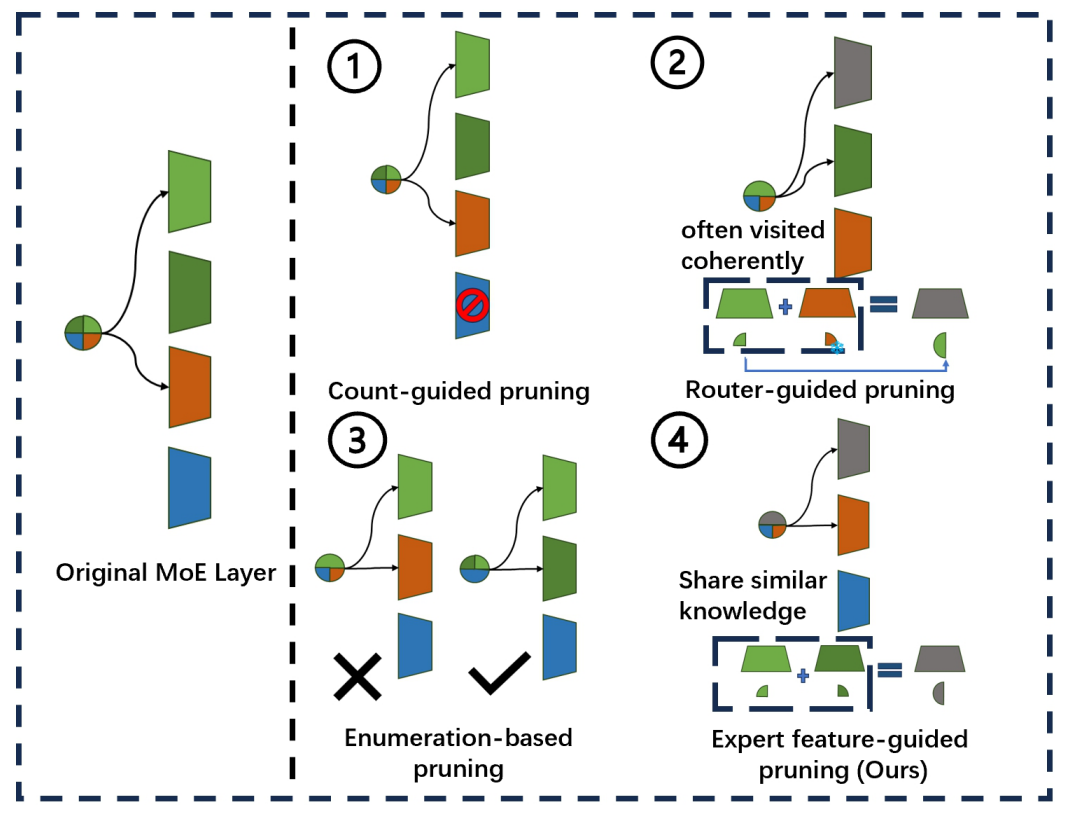

Zeliang Zhang, Xiaodong Liu, Hao Cheng, Chenliang Xu, Jianfeng Gao. ACL Findings, 2025. We propose a method of grouping and pruning similar experts to improve the model's parameter efficiency. |

|

Yunlong Tang, Jing Bi, Siting Xu, Luchuan Song, Susan Liang, Teng Wang, Daoan Zhang, Jie An, Jingyang Lin, Rongyi Zhu, Ali Vosoughi, Chao Huang, Zeliang Zhang, Feng Zheng, Jianguo Zhang, Ping Luo, Jiebo Luo, Chenliang Xu. Technical Report, 2023. This survey provides a detailed overview of recent advances in video understanding that harness the power of LLMs. |

|

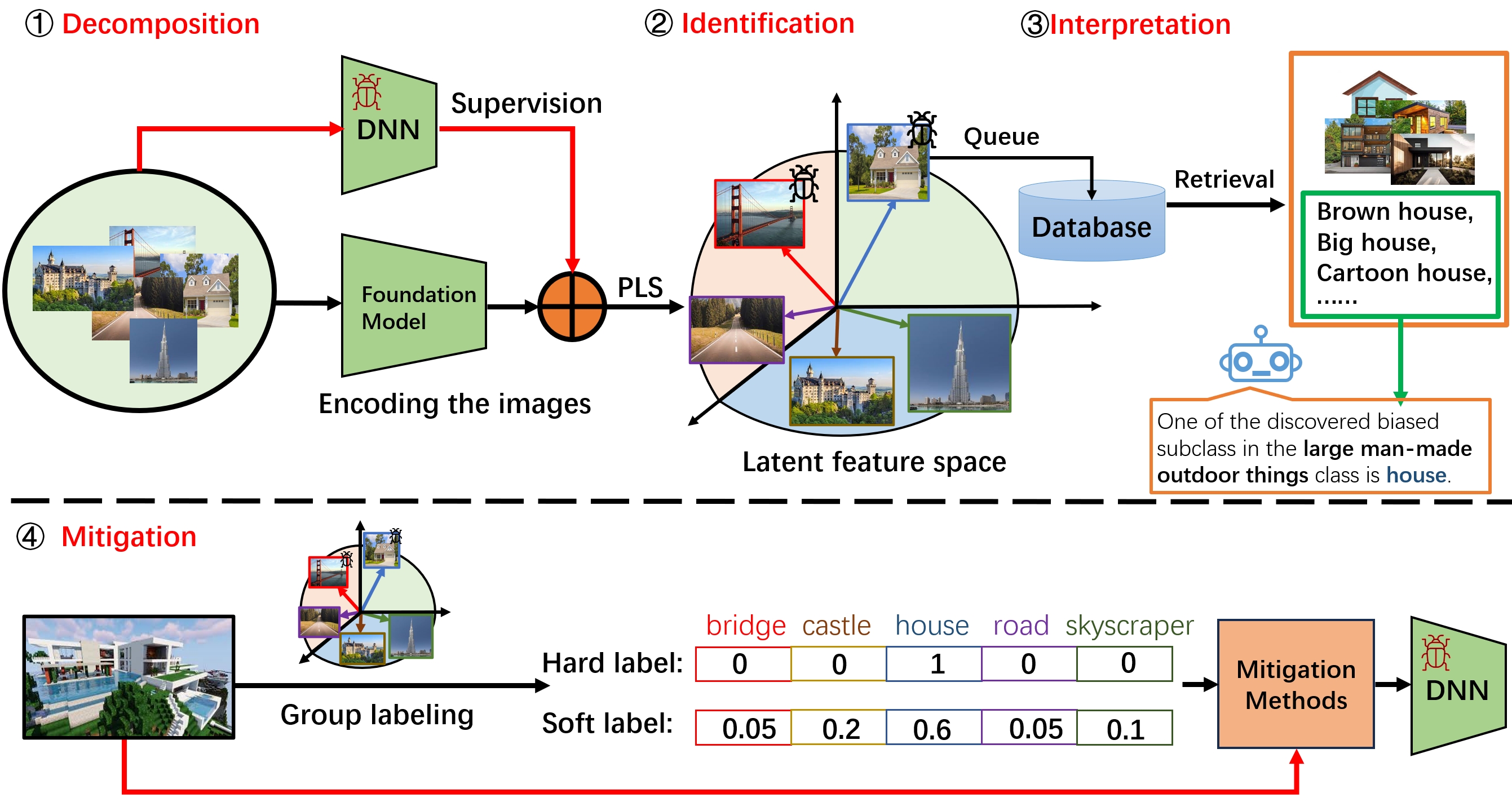

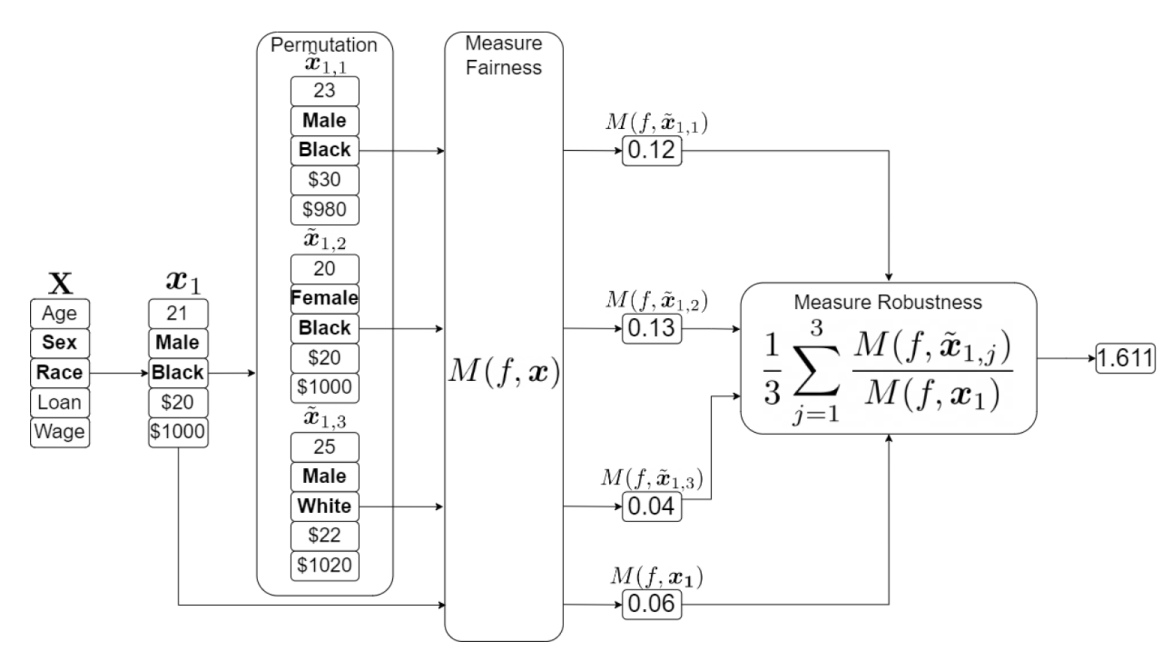

Zeliang Zhang*, Mingqian Feng*, Zhiheng Li, Chenliang Xu. CVPR, 2024. We propose a novel method, DII (decomposition, identification, and interpretation), to debug multiple biases in models. |

|

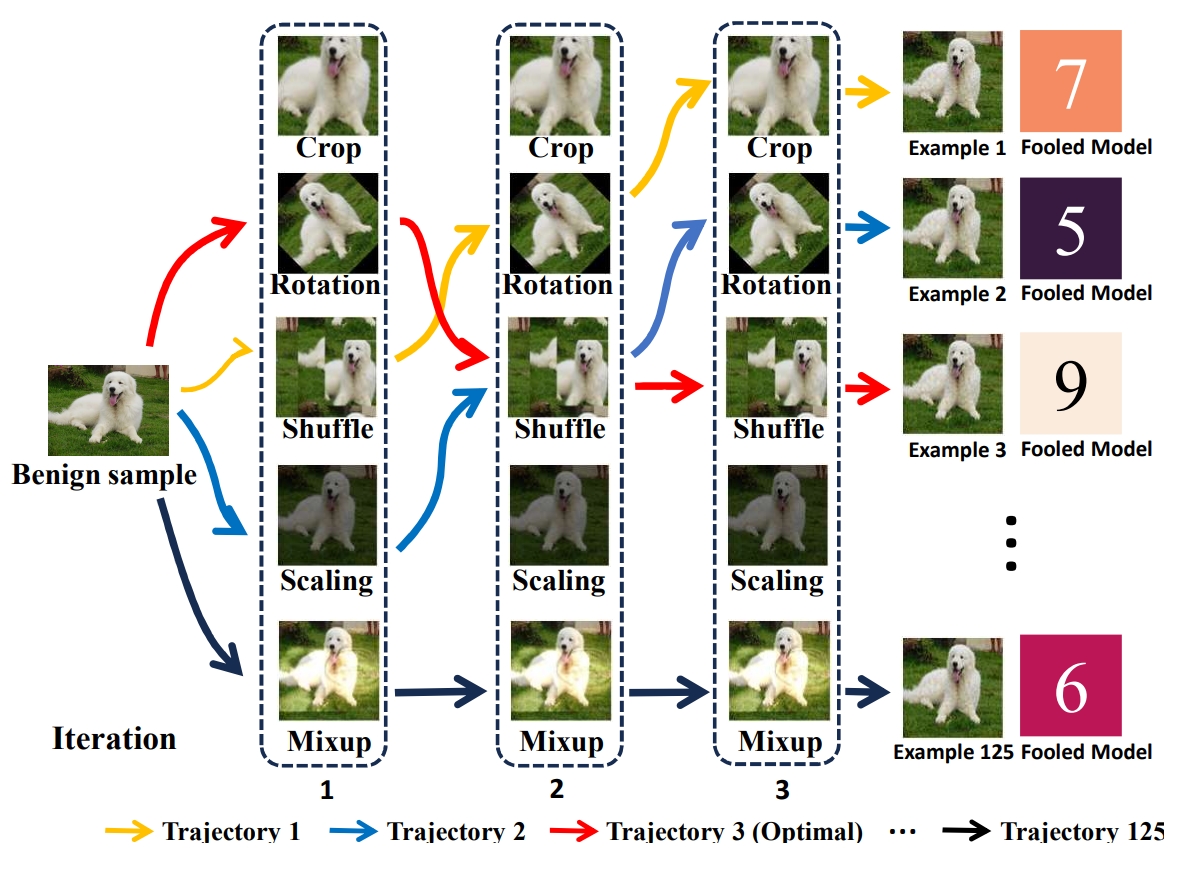

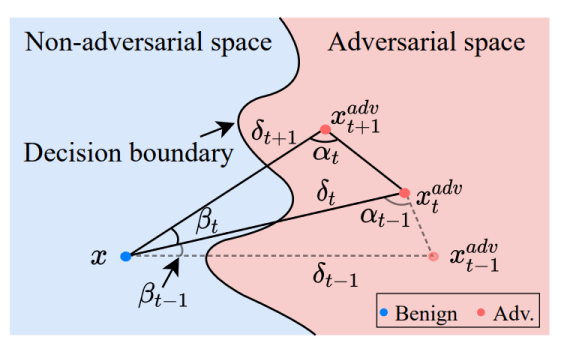

Rongyi Zhu*, Zeliang Zhang*‡, Susan Liang, Zhuo Liu, Chenliang Xu. CVPR, 2024. We propose a novel method, L2T (learn to transform), to boost adversarial transferability. |

|

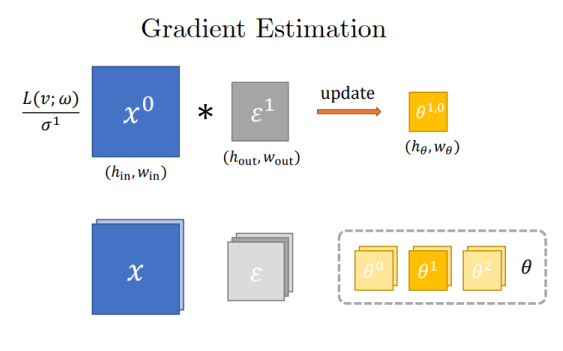

Jinyang Jiang*, Zeliang Zhang*, Chenliang Xu, Zhaofei Yu, Yijie Peng. ICLR, 2024. We explore the potential of the likelihood ratio method for gradient estimation and train multiple neural network architectures without back-propagation. |

|

Zeliang Zhang, Wei Yao, Xiaosen Wang. Technical Report, 2024. We propose a bag of novel tricks to boost adversarial transferability among different models. |

|

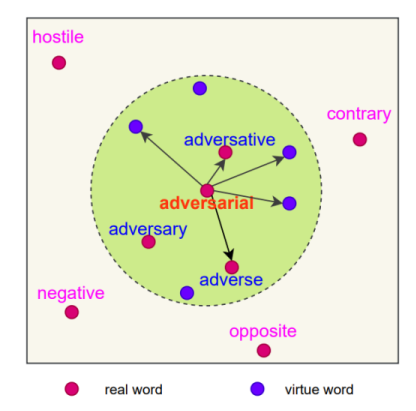

Zeliang Zhang*, Wei Yao*, Susan Liang, Chenliang Xu. EACL Findings, 2024. We propose treating word substitution as a continuous perturbation of the word embedding representation for better robustness. |

|

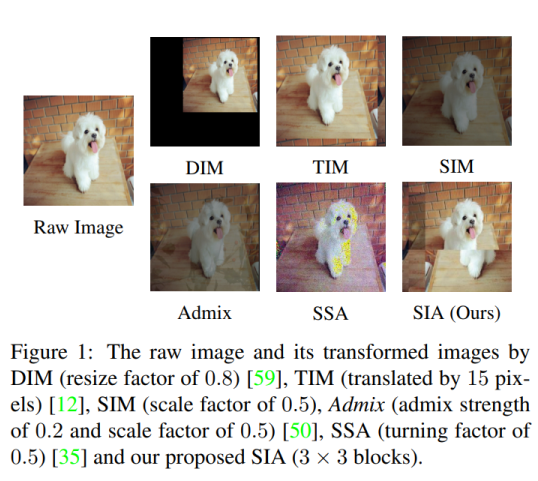

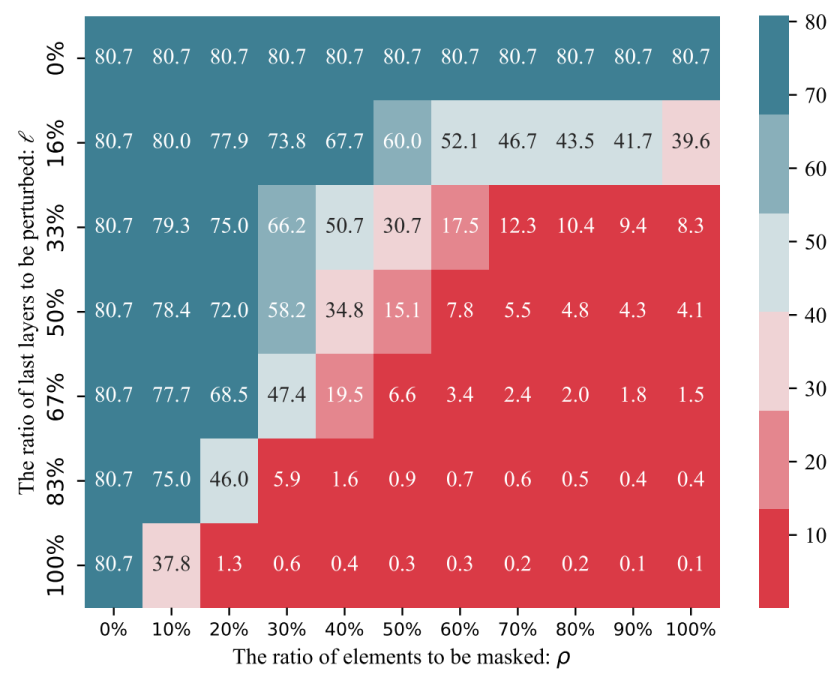

Xiaosen Wang, Zeliang Zhang, Jianping Zhang. ICCV, 2023. We propose a novel input-transformation-based attack, called Structure Invariant Transformation (SIA), which applies a random image transformation to each image block to craft a set of diverse images for gradient calculation. |

|

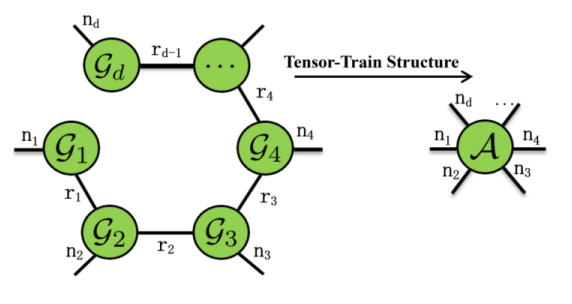

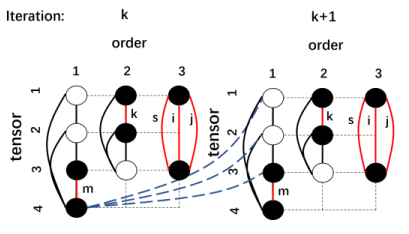

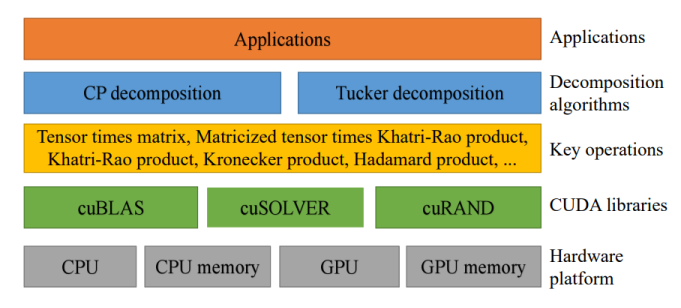

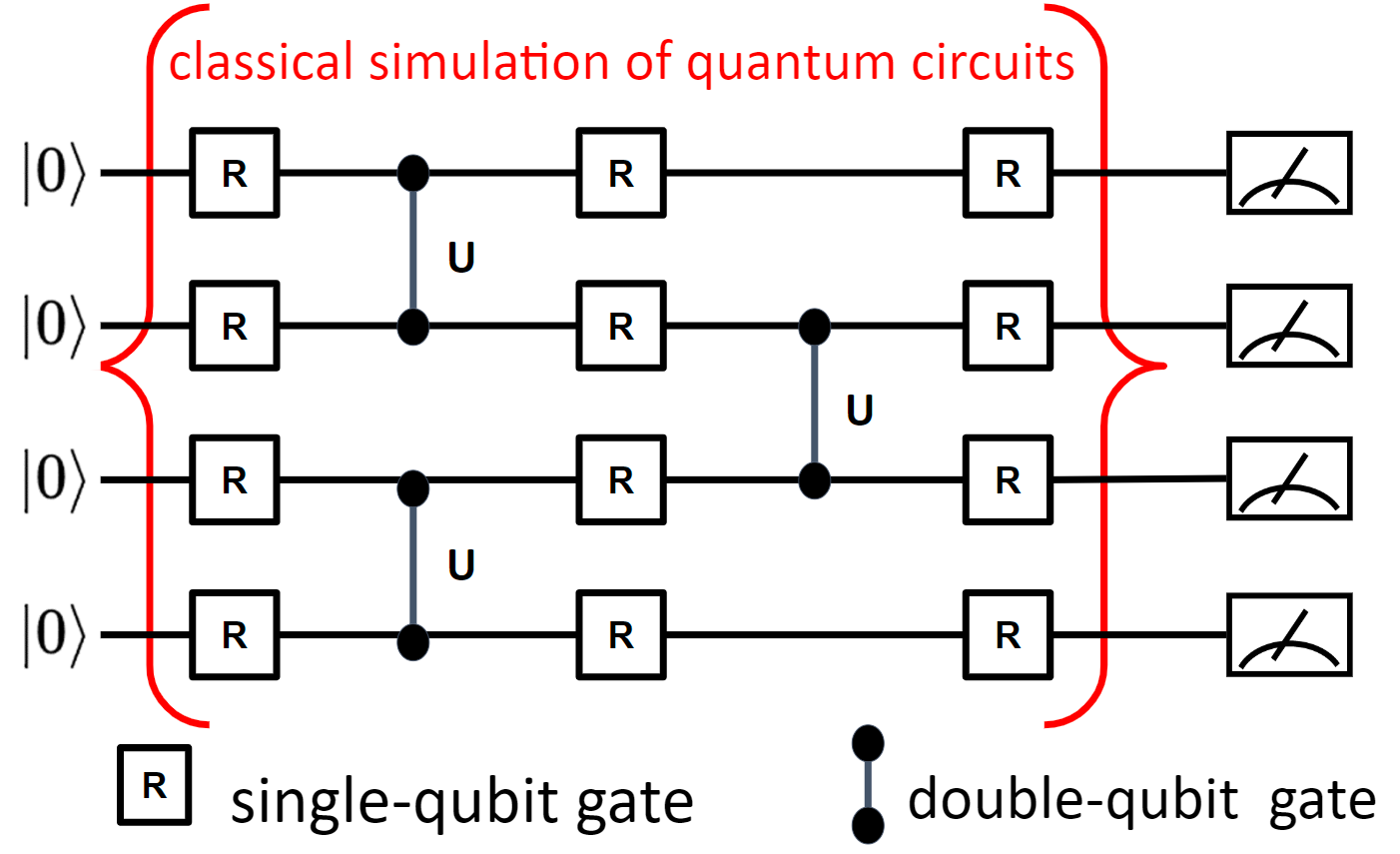

Xiao-Yang Liu, Hao Hong, Zeliang Zhang, Weiqing Tong, Xiaodong Wang, Anwar Walid. IEEE Transactions on Computers, 2024. We present high-performance tensor-train primitives using GPU tensor cores and demonstrate three applications. |

|

Zhiyuan Wang*, Zeliang Zhang*, Siyuan Liang, Xiaosen Wang. BMVC, 2023 (oral). We propose diversifying the high-level features (DHF) for more transferable adversarial examples. |

|

Edward Small, Wei Shao, Zeliang Zhang, Peihan Liu, Jeffrey Chan, Kacper Sokol, Flora Salim. Data Mining and Knowledge Discovery, 2024. We quantitatively evaluate the robustness of fairness optimization strategies. |

|

Xiao-Yang Liu, Zeliang Zhang. NeurIPS Datasets and Benchmarks Track, 2023. We develop a dozen massively parallel environments to simulate quantum circuits. We open-source our parallel gym environments and benchmarks. |

|

Xiao-Yang Liu*, Zeliang Zhang*, Zhiyuan Wang, Han Lu, Xiaodong Wang, Anwar Walid. IEEE Transactions on Computers, 2022. We propose novel hardware-oriented optimization strategies for tensor learning primitives on GPU tensor cores. |

|

Xiaosen Wang, Zeliang Zhang, Kangheng Tong, Dihong Gong, Kun He, Zhifeng Li, Wei Liu. ECCV, 2022. We propose a novel Triangle Attack (TA) that optimizes the perturbation by exploiting the geometric fact that the longer side is always opposite the larger angle in any triangle. |

|

Li Xiao, Zeliang Zhang, Yijie Peng. Short paper: CASE, 2022; long paper: T-ASE, 2024. We propose a new technique to compute the pathwise stochastic gradient estimate with respect to the standard deviation of the Gaussian noise added to each neuron of the ANN. |

|

University of Rochester, NY, USA

Ph.D. in Computer Science, Sep. 2022 - Present. Advisor: Chenliang Xu |

|

Huazhong University of Science and Technology, Wuhan, China

B.Eng. in Computer Science and Technology, Sep. 2018 - Jun. 2022 |

|

Microsoft Research, Redmond, US

Research intern, then part-time researcher, May 2025 - Dec. 2025. Advisors: Xiaodong Liu and Hao Cheng. Work on efficient training and inference of reasoning language models. |

|

Microsoft Research, Redmond, US

Research intern, then part-time researcher, May 2024 - Nov. 2024. Advisors: Xiaodong Liu and Hao Cheng. Work on efficient training and inference of language models. |

|

Microsoft Research Asia, Beijing, China

Research intern, Oct. 2021 - Jun. 2022. Advisor: Xinran Wei. Work on high-performance computation of DFT, an important bottleneck in material design using AI. |

|

Columbia University, NYC, US

Research assistant, Feb. 2020 - Dec. 2022. Advisor: Xiao-Yang Liu. Worked on high-performance tensor computation using GPUs and published two workshop papers at top conferences: "Trillion-Tensor: Trillion-Scale CP Tensor Decomposition" at the IJCAI 2020 TNRML workshop and "Parallel TTr1-Tensor: Randomized Compression-based Scheme for Tensor Train Rank-1 Decomposition" at the NeurIPS 2020 QTNML workshop. |

|

The template is based on Jon Barron's website.

|